Edge computing: Meeting the challenge of high-bandwidth IoT

Explore how edge computing processes high-bandwidth IoT data locally, cutting latency, reducing bandwidth costs, and enabling real-time AI applications like autonomous vehicles and smart cities.

Large IoT deployments now generate more data in a single day than entire data centers handled a decade ago. As 4K cameras, autonomous systems, and industrial sensors proliferate, the old model of sending every byte to the cloud has become technically and economically impractical.

Edge computing solves this by processing data where it's created, at or near IoT devices, rather than transmitting everything across expensive, bandwidth-constrained networks to distant cloud servers.

This article explores how edge computing transforms IoT connectivity requirements, reduces costs, and enables use cases that simply couldn't exist under traditional cloud-centric architectures.

What is the IoT edge?

Edge computing processes and analyzes data close to where it's generated, at IoT devices or nearby gateways, instead of sending it hundreds or thousands of miles to centralized cloud data centers. The basic architecture of IoT edge computing follows a three-tier model:

- The device layer collects raw data from sensors, cameras, and connected equipment.

- The edge layer processes, filters, and analyzes data locally and makes real-time decisions.

- The cloud layer stores historical data, performs complex analytics, and trains AI models.

In a traditional cloud-centric IoT architecture, devices send all their data to the cloud, which creates bandwidth bottlenecks and latency. With edge computing in IoT, data is processed locally, so far less of it has to travel to the cloud continuously and in high volume. This shift enables faster responses and more efficient use of network resources, which makes IoT edge computing a strong fit for modern, high-bandwidth IoT deployments.

Why high-bandwidth IoT applications need edge computing

Modern IoT devices like 4K cameras, LiDAR sensors, industrial vision systems, and medical imaging equipment generate massive data volumes that overwhelm traditional connectivity. A single 4K security camera generates 6-8 GB per hour. Autonomous vehicles produce up to 4 TB of sensor data per day.

Sending all this data to the cloud is impractical for several reasons:

- Bandwidth constraints: Network links can't handle continuous high-volume transmission from thousands of devices

- Cost explosion: Cellular data costs become prohibitive at scale

- Latency requirements: Applications like autonomous vehicles need millisecond response times that cloud round-trips can't deliver

- Reliability concerns: Dependence on continuous cloud connectivity creates single points of failure

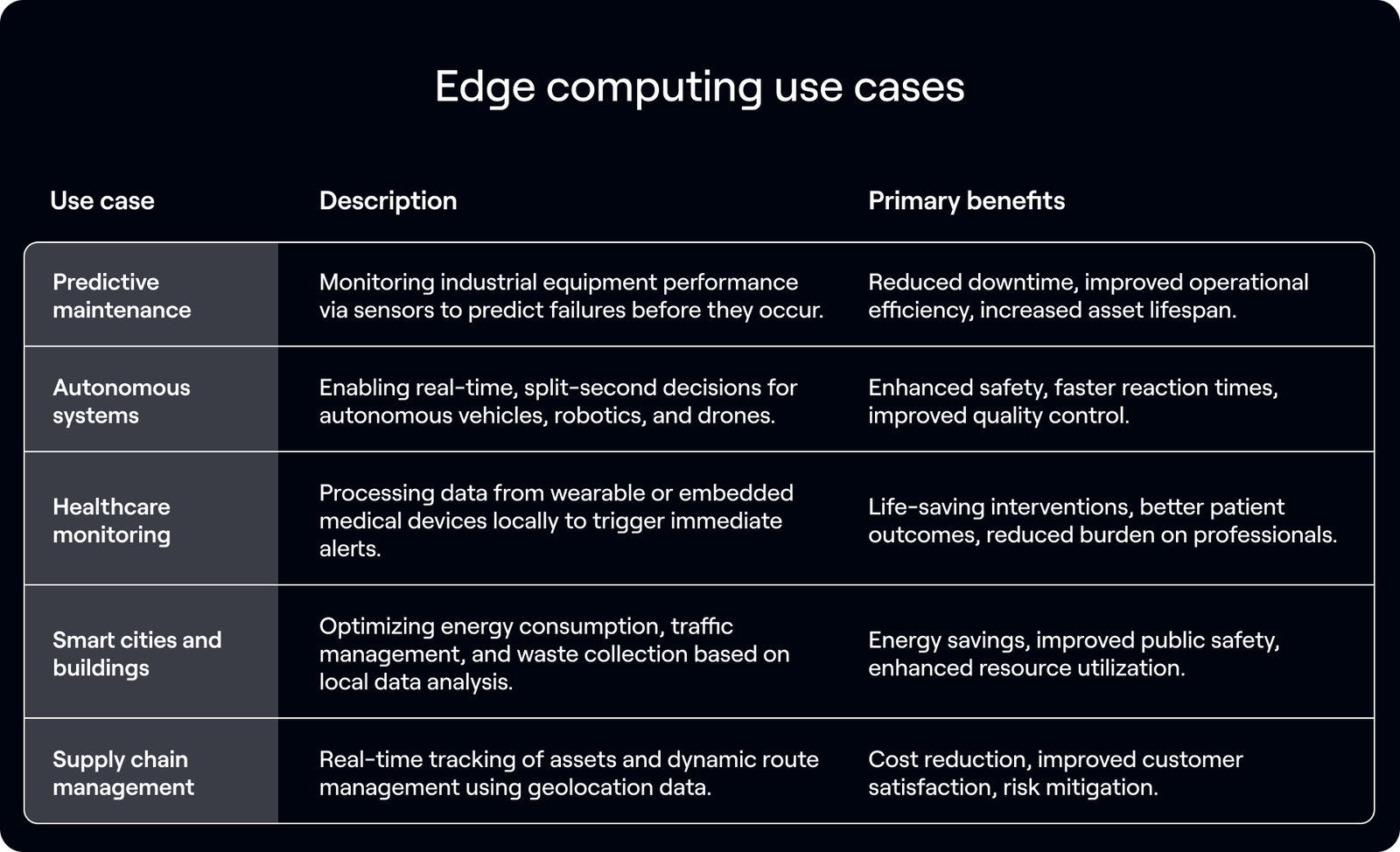

Centralized processing falls short in several specific use cases. Machine vision systems in manufacturing inspect products at high speed and need instant feedback. Healthcare monitoring devices can't wait for cloud processing during emergencies. Traffic management systems must respond immediately to changing conditions. Self-driving vehicles must process sensor data and make decisions in under 100 milliseconds.

The paradigm shift is clear: the question is no longer "how do we get more bandwidth?" but "how do we use bandwidth more intelligently?" Edge computing for IoT is the answer, enabling organizations to process data where it's generated and only transmit what's necessary.

How edge computing reshapes connectivity requirements

Adopting edge computing for IoT fundamentally changes how networks are designed and used. Instead of building networks to carry all raw data to the cloud, organizations now create hierarchical systems where different types of data travel different distances.

The new connectivity model looks like this:

- Local or edge connectivity uses high-bandwidth, low-latency links such as industrial Ethernet, Wi-Fi 6, or private 5G between devices and edge nodes to handle raw data.

- Backhaul connectivity uses moderate-bandwidth wide-area links such as cellular or fiber to carry only processed insights, summaries, and alerts to the cloud.

Edge systems decide which data is time-critical, process it locally, and determine what needs long-term storage in the cloud.

Consider a factory with 100 cameras. The traditional approach sends 100 cameras × 8 GB/hour = 800 GB/hour to the cloud, creating massive bandwidth demand and cost. The edge approach processes video on a local edge server and sends only alerts, metadata, and selected clips to the cloud, perhaps 10-20 GB/hour. That's a bandwidth reduction of at least 97%.
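
The arithmetic behind this example can be checked in a few lines of Python. The 8 GB/hour per-camera rate and the 10-20 GB/hour edge output are the article's illustrative figures rather than measurements, and `bandwidth_reduction` is a hypothetical helper name.

```python
def bandwidth_reduction(raw_gb_per_hour: float, sent_gb_per_hour: float) -> float:
    """Fraction of raw traffic that no longer crosses the backhaul link."""
    if raw_gb_per_hour <= 0:
        raise ValueError("raw rate must be positive")
    return 1.0 - sent_gb_per_hour / raw_gb_per_hour

# Illustrative figures from the factory example, not measured values.
cameras = 100
gb_per_camera_hour = 8.0
raw = cameras * gb_per_camera_hour  # 800 GB/hour if every camera streams to the cloud

for sent in (10.0, 20.0):
    print(f"{sent:.0f} GB/h sent -> {bandwidth_reduction(raw, sent):.2%} reduction")
```

At 20 GB/hour the reduction is 97.5%, and at 10 GB/hour it is 98.75%, which is where the "at least 97%" figure comes from.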

This shift changes network design priorities from maximizing upload bandwidth from every device to optimizing local processing power and selective cloud communication. Edge computing enables operation during connectivity disruptions, so local systems continue functioning even when backhaul links fail. Global IoT connectivity providers like Hologram support this architecture by offering flexible, multi-carrier redundancy that ensures edge nodes maintain reliable backhaul when needed while optimizing data transmission costs.

Key benefits of edge computing for IoT connectivity

Edge computing delivers measurable advantages that directly address the technical and economic challenges of modern IoT deployments. From eliminating latency bottlenecks to slashing bandwidth costs by up to 95%, these benefits transform what's possible at scale. Here's how edge architecture solves the connectivity problems that make traditional cloud-centric approaches impractical.

Reduced latency for real-time applications

Latency is the delay between collecting data and acting on it. Autonomous vehicles, where immediate decisions are essential for safety, as well as industrial control systems and telemedicine, need responses within milliseconds, not seconds. This need for speed is a primary driver for the adoption of edge computing in IoT.

Cloud round-trip latency, the time it takes to send data to a remote data center and receive a command back, typically ranges from 100 to 200 milliseconds, which is too slow for time-sensitive operations. Local edge processing analyzes and acts on data near its source and can cut this delay to roughly 1 to 10 milliseconds, enabling true real-time performance.
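
One way to make these numbers concrete is to ask how far a vehicle travels while it waits for a decision. The sketch below assumes a constant speed of 100 km/h (an illustrative value, not from the text) and uses the latency ranges quoted above; `distance_during_latency` is a hypothetical helper.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Meters traveled at a constant speed while waiting latency_ms for a decision."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000

# Latency figures from the text; 100 km/h is an assumed highway speed.
for label, latency_ms in [("cloud, 200 ms", 200), ("cloud, 100 ms", 100),
                          ("edge, 10 ms", 10), ("edge, 1 ms", 1)]:
    print(f"{label}: {distance_during_latency(100, latency_ms):.2f} m")
```

At 100 km/h, a 100-200 ms cloud round trip corresponds to roughly 2.8-5.6 meters of travel before any response arrives, while 1-10 ms of edge latency corresponds to about 3 to 28 centimeters.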

Dramatic bandwidth savings and lower costs

By processing data locally and transmitting only essential information, organizations typically reduce bandwidth consumption by 70-95%. That translates into lower cellular data charges, which matters most for remote deployments where connectivity is expensive. Less traffic on network links also means lower infrastructure costs and fewer upgrades, and cloud storage fees drop because only relevant data reaches the cloud.

A logistics company with 1,000 GPS-enabled vehicles illustrates this well. Traditional continuous, high-frequency location updates consume 500 GB/month, which at $10/GB costs $5,000/month. Edge-optimized processing sends only route deviations and key events, cutting usage to 50 GB/month, or $500/month at the same rate.
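
Below is a minimal sketch of the "send only route deviations" idea, often called report-by-exception. It assumes the planned route is available on the vehicle's gateway as a list of waypoints, measures distance to the nearest waypoint rather than to the route line (a simplification), and uses a hypothetical 200-meter tolerance. It is an illustration, not any specific telematics product's logic.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

EARTH_RADIUS_M = 6_371_000

def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

@dataclass
class DeviationFilter:
    route: List[Tuple[float, float]]  # planned waypoints as (lat, lon)
    threshold_m: float = 200.0        # hypothetical tolerance before a fix counts as off-route
    off_route: bool = False

    def process(self, lat: float, lon: float) -> Optional[dict]:
        """Return an event only when the vehicle leaves or rejoins the route, else None."""
        nearest = min(haversine_m(lat, lon, rlat, rlon) for rlat, rlon in self.route)
        now_off = nearest > self.threshold_m
        if now_off == self.off_route:
            return None  # no state change: nothing worth sending upstream
        self.off_route = now_off
        return {"event": "route_deviation" if now_off else "route_rejoined",
                "lat": lat, "lon": lon, "distance_m": round(nearest)}

route = [(35.7796, -78.6382), (35.7900, -78.6382)]  # hypothetical planned waypoints
f = DeviationFilter(route)
for fix in [(35.7796, -78.6382), (35.7796, -78.6000), (35.7796, -78.6001), (35.7797, -78.6382)]:
    event = f.process(*fix)
    if event:
        print(event)  # only these events would be transmitted
```

Because the filter emits only on state changes, a vehicle that stays on its route produces nothing from this filter, and a vehicle that leaves its route sends one event instead of a continuous stream of fixes.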

Improved reliability and uptime

Edge systems don't depend on constant cloud connectivity to function, which is a major advantage for remote or mobile deployments. Network outages don't halt local processing and decision-making, so operations continue even in environments with unreliable internet access. Edge devices keep collecting and analyzing data, store results locally, and sync to the cloud once connectivity is restored.

This buffering capability prevents data loss and maintains workflow continuity. Critical alerts and controls operate independently of backhaul status, meaning immediate, mission-critical responses are not delayed by network latency or failure. This high degree of autonomy is a core benefit of edge computing for IoT applications.
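
The buffering behavior described above is usually called store-and-forward. The sketch below is a minimal in-memory version under stated assumptions: `send` stands in for a hypothetical uplink function that returns `True` on successful delivery, and the buffer is bounded, so if an outage outlasts local capacity the oldest records are dropped. A production system would typically persist the buffer to disk so it survives a reboot.

```python
from collections import deque
from typing import Callable, Deque

class StoreAndForward:
    """Buffer records locally while the backhaul is down; send them oldest-first when it returns."""

    def __init__(self, send: Callable[[dict], bool], max_items: int = 10_000):
        self.send = send  # hypothetical uplink: returns True only if the record was delivered
        self.buffer: Deque[dict] = deque(maxlen=max_items)  # a full buffer drops the oldest record

    def submit(self, record: dict) -> None:
        self.buffer.append(record)
        self.flush()

    def flush(self) -> int:
        """Try to drain the buffer in order; stop at the first failure. Returns records sent."""
        sent = 0
        while self.buffer:
            if not self.send(self.buffer[0]):
                break  # link still down: keep the record for the next attempt
            self.buffer.popleft()
            sent += 1
        return sent

# Simulated link for illustration.
link = {"up": False}
delivered = []

def fake_uplink(record: dict) -> bool:
    if link["up"]:
        delivered.append(record)
    return link["up"]

sf = StoreAndForward(fake_uplink, max_items=3)
for i in range(5):
    sf.submit({"reading": i})  # link down: records accumulate locally
link["up"] = True
sf.flush()                     # link restored: surviving records go out oldest-first
print([r["reading"] for r in delivered])
```

With a capacity of three, readings 0 and 1 are evicted during the simulated outage, and readings 2, 3, and 4 are delivered in order once the link returns.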

Enhanced security and data privacy

Sensitive data processed locally doesn't travel over the public internet or sit in centralized cloud databases. This reduces the attack surface: the less data crosses open networks, the fewer opportunities attackers have to intercept it. Processing data at the edge also helps organizations meet stringent regulatory requirements such as GDPR and HIPAA, which restrict how personal and health data may be shared, transferred, and stored.
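
One common way to keep raw identifiers on site is to drop or pseudonymize sensitive fields at the edge before anything is uploaded. The sketch below uses an HMAC-SHA256 keyed hash from Python's standard library; the field names and `SITE_KEY` are hypothetical. Under GDPR, pseudonymized data generally still counts as personal data, so this reduces exposure but does not remove compliance obligations.

```python
import hashlib
import hmac
import json

# Hypothetical per-site secret. In practice it would be provisioned into secure storage
# on the edge node, never hard-coded.
SITE_KEY = b"replace-with-a-per-site-secret"

DROP_FIELDS = {"patient_name", "face_image"}  # never leave the site
PSEUDONYMIZE_FIELDS = {"patient_id"}          # replaced with a keyed pseudonym

def pseudonym(value: str, key: bytes = SITE_KEY) -> str:
    """HMAC-SHA256 of the value, truncated to 16 hex characters for readability.

    Stable for a given key, so records can still be correlated upstream.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]

def prepare_for_upload(record: dict) -> str:
    """Return the JSON payload that is allowed to leave the edge node."""
    out = {}
    for name, value in record.items():
        if name in DROP_FIELDS:
            continue
        out[name] = pseudonym(str(value)) if name in PSEUDONYMIZE_FIELDS else value
    return json.dumps(out, sort_keys=True)

print(prepare_for_upload({"patient_id": "12345", "patient_name": "Jane Doe", "heart_rate": 72}))
```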

Edge security analytics can also detect and respond to anomalies and potential threats in real time where the data is generated, avoiding the delay of waiting for analysis in a distant, centralized cloud environment. Together, reduced data transit, compliance support, and rapid local threat response strengthen the overall security posture of an IoT deployment.
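
A very simple form of local anomaly detection is a rolling z-score: flag any reading that sits far outside the recent baseline. The sketch below could be applied to, for example, a device's outbound message rate, where a sudden spike might indicate compromise. The window size, threshold, and minimum baseline are hypothetical defaults, and production edge security analytics are usually far more sophisticated.

```python
import math
from collections import deque

class RollingZScore:
    """Flag readings that fall more than k standard deviations from a rolling baseline."""

    def __init__(self, window: int = 60, k: float = 4.0, min_baseline: int = 10):
        self.values = deque(maxlen=window)  # most recent readings only
        self.k = k
        self.min_baseline = min_baseline    # readings needed before any verdict is given

    def update(self, x: float) -> bool:
        """Return True if x looks anomalous relative to the current window."""
        anomalous = False
        if len(self.values) >= self.min_baseline:
            mean = sum(self.values) / len(self.values)
            std = math.sqrt(sum((v - mean) ** 2 for v in self.values) / len(self.values))
            # A perfectly flat baseline has std == 0; any change from it counts as anomalous.
            anomalous = abs(x - mean) > self.k * std if std > 0 else x != mean
        self.values.append(x)  # simplification: anomalies also enter the baseline
        return anomalous
```

Because the detector needs only the last `window` readings, it runs comfortably on modest edge hardware and can raise an alert locally without waiting on the cloud.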

Scalability for massive IoT deployments

As IoT deployments grow from hundreds to thousands or millions of devices, centralized architectures become bottlenecks. Edge computing enables scale because each edge node handles processing for its local device cluster, distributing computational load. Adding devices doesn't proportionally increase backhaul bandwidth demand. Organizations scale horizontally by deploying additional edge nodes rather than upgrading centralized infrastructure.

For example, a cloud-centric model with 10,000 devices sending data directly to the cloud creates massive bandwidth demand, potential bottlenecks, and expensive infrastructure. An edge-distributed model spreads the same 10,000 devices across 100 edge nodes (about 100 devices per node), which keeps local bandwidth manageable, backhaul predictable, and scaling cost-effective.

Common challenges and how to overcome them

Managing distributed edge infrastructure

Unlike centralized cloud systems, edge deployments involve managing potentially hundreds or thousands of distributed nodes across different locations. Monitoring health and performance of remote edge devices, pushing software updates and security patches to distributed systems, and troubleshooting issues without physical access all present challenges.

Centralized orchestration platforms provide unified visibility and control across all edge nodes. Remote management capabilities enable over-the-air updates, remote diagnostics, and automated health checks. Containerization and virtualization technologies like Docker and Kubernetes enable consistent deployment and management across diverse edge hardware. IoT connectivity platforms with robust APIs and dashboards, like Hologram's real-time SIM fleet management, simplify monitoring and managing the connectivity layer of distributed edge deployments.
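
Automated health checks often start with simple heartbeat tracking: each edge node reports in periodically, and the management layer flags nodes that have gone quiet. The sketch below is a generic, hypothetical example. It is not the API of Hologram, Kubernetes, or any other platform, and the heartbeat transport is left out.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, List, Optional

@dataclass
class FleetMonitor:
    """Track the last heartbeat from each edge node and list nodes that have gone quiet."""
    timeout_s: float = 90.0  # hypothetical: three missed 30-second heartbeats
    last_seen: Dict[str, float] = field(default_factory=dict)

    def heartbeat(self, node_id: str, now: Optional[float] = None) -> None:
        """Record a heartbeat; call this whenever a node reports in (transport not shown)."""
        self.last_seen[node_id] = time.monotonic() if now is None else now

    def stale_nodes(self, now: Optional[float] = None) -> List[str]:
        """Nodes whose most recent heartbeat is older than timeout_s."""
        now = time.monotonic() if now is None else now
        return sorted(n for n, t in self.last_seen.items() if now - t > self.timeout_s)

monitor = FleetMonitor(timeout_s=60)
monitor.heartbeat("edge-site-a", now=0)
monitor.heartbeat("edge-site-b", now=50)
print(monitor.stale_nodes(now=100))  # edge-site-a last reported 100 s ago
```

Passing `now` explicitly keeps the example deterministic; in a live deployment the default monotonic clock would be used.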

Ensuring security across edge nodes

Distributed edge nodes create more potential entry points for security threats compared to centralized, heavily fortified data centers. Physical security of edge devices in remote or accessible locations, ensuring consistent security policies across all nodes, and detecting and responding to compromised edge devices all present security concerns.

Zero-trust architecture authenticates and authorizes every connection and transaction. Encrypted communication secures all data transmission between devices, edge nodes, and cloud. Automated threat detection deploys edge-based security analytics that identify anomalies locally. Regular security updates through automated patching systems protect edge software. Hardware security modules provide tamper-resistant hardware for sensitive edge applications.
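
Zero trust and encrypted communication are broad practices, but one concrete building block is mutual TLS, where an edge node accepts only clients that present a certificate signed by the fleet's own certificate authority. The sketch below uses Python's standard `ssl` module; the certificate file paths are hypothetical, and mutual TLS on its own does not make an architecture zero-trust.

```python
import ssl
from typing import Optional

def edge_server_tls_context(cert_file: Optional[str] = None,
                            key_file: Optional[str] = None,
                            fleet_ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Server-side TLS context that requires every client to present a certificate
    issued by the fleet's own CA (mutual TLS). All file paths are hypothetical."""
    # A bare PROTOCOL_TLS_SERVER context trusts no CAs, so only the fleet CA loaded below is trusted.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    ctx.verify_mode = ssl.CERT_REQUIRED           # no valid client certificate, no connection
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # this node's own identity
    if fleet_ca_file:
        ctx.load_verify_locations(cafile=fleet_ca_file)
    return ctx

# Example (placeholder paths):
# ctx = edge_server_tls_context("node.crt", "node.key", "fleet-ca.pem")
```

Wrapping a listening socket with `ctx.wrap_socket(sock, server_side=True)` then enforces the client-certificate check on every connection.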

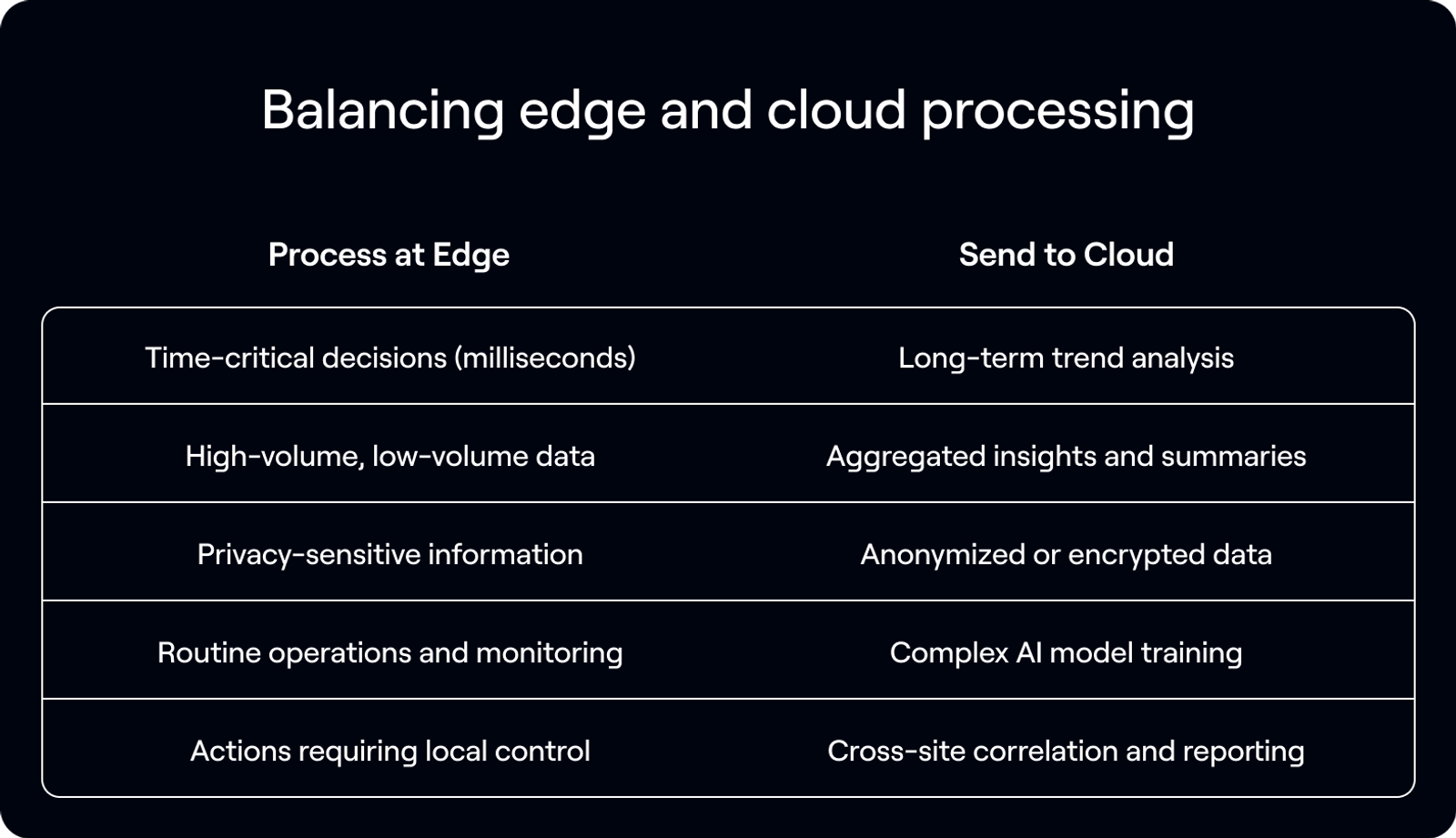

Balancing edge and cloud processing

Determining what to process at the edge versus what to send to the cloud isn't always obvious. A decision framework helps.

Start by identifying latency requirements, data volume, and privacy constraints for each use case. Begin with conservative edge processing, monitor performance, and adjust the edge/cloud split based on real-world results.
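
The criteria above can be encoded as a first-pass placement rule. The sketch below is hypothetical: the 150 ms cloud round-trip figure sits inside the 100-200 ms range cited earlier, and the daily upload budget is an arbitrary placeholder meant to be tuned from pilot measurements.

```python
from dataclasses import dataclass

@dataclass
class Workload:
    name: str
    max_latency_ms: float  # how quickly a response is needed
    raw_gb_per_day: float  # volume if every raw byte were shipped to the cloud
    regulated_data: bool   # e.g. personal or health data with transfer restrictions

# Hypothetical thresholds to adjust from real-world results.
CLOUD_ROUND_TRIP_MS = 150.0
UPLOAD_BUDGET_GB_PER_DAY = 5.0

def place(w: Workload) -> str:
    """Return 'cloud', or 'edge' plus the reasons the workload should stay local."""
    reasons = []
    if w.max_latency_ms < CLOUD_ROUND_TRIP_MS:
        reasons.append("latency")
    if w.raw_gb_per_day > UPLOAD_BUDGET_GB_PER_DAY:
        reasons.append("volume")
    if w.regulated_data:
        reasons.append("privacy")
    return "edge (" + ", ".join(reasons) + ")" if reasons else "cloud"

for w in [Workload("defect detection", 20, 200, False),
          Workload("fleet-wide trend report", 86_400_000, 0.1, False),
          Workload("patient vitals", 1_000, 0.5, True)]:
    print(w.name, "->", place(w))
```

Treat the output as a conservative starting point for a pilot, then move workloads between tiers as monitoring data comes in.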

How 5G and advanced networks accelerate edge computing adoption

5G is a key enabling technology that makes edge computing practical for more use cases. Edge computing requires fast, reliable connectivity to edge nodes, and 5G provides exactly that.

5G is designed for latency in the 1-10 ms range, compared with the 30-50 ms typical of 4G. This enables remote surgery, where surgeons control robotic instruments over 5G with minimal delay. Autonomous vehicles can coordinate through vehicle-to-everything (V2X) communication via 5G edge infrastructure. In industrial automation, wireless factory robots can achieve response times comparable to wired connections.

Low latency complements edge processing, so even when edge nodes communicate with each other or with regional cloud resources, 5G keeps total latency within acceptable bounds.

Network slicing is 5G's ability to create multiple virtual networks on shared physical infrastructure, each optimized for specific requirements. For edge IoT, organizations can provision dedicated network slices with guaranteed bandwidth, latency, and reliability for critical edge applications.

- A healthcare slice guarantees low latency and high reliability for patient monitoring and telemedicine edge devices.

- An industrial slice prioritizes bandwidth and deterministic latency for manufacturing edge systems.

- A smart city slice optimizes for massive device connectivity and moderate bandwidth for municipal IoT sensors.
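
To make slicing more concrete, an application team might describe its requirements and match them against the slices an operator offers. In the sketch below, the Slice/Service Type (SST) values follow the standardized values in 3GPP TS 23.501 (1 for eMBB, 2 for URLLC, 3 for massive IoT), but the latency, bandwidth, and density figures are hypothetical and do not describe any operator's guarantees.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class SliceProfile:
    name: str
    sst: int                   # 3GPP TS 23.501 Slice/Service Type: 1 = eMBB, 2 = URLLC, 3 = MIoT
    max_latency_ms: float      # hypothetical latency target, not an operator guarantee
    min_bandwidth_mbps: float  # hypothetical guaranteed bandwidth
    max_devices_per_km2: int   # hypothetical device density the slice is dimensioned for

SLICES = [
    SliceProfile("healthcare", sst=2, max_latency_ms=10, min_bandwidth_mbps=10, max_devices_per_km2=1_000),
    SliceProfile("industrial", sst=2, max_latency_ms=5, min_bandwidth_mbps=100, max_devices_per_km2=10_000),
    SliceProfile("smart-city", sst=3, max_latency_ms=100, min_bandwidth_mbps=1, max_devices_per_km2=1_000_000),
]

def matching_slices(latency_ms: float, bandwidth_mbps: float, devices_per_km2: int) -> List[str]:
    """Names of slices whose profile satisfies an application's latency, bandwidth, and density needs."""
    return [
        s.name for s in SLICES
        if s.max_latency_ms <= latency_ms
        and s.min_bandwidth_mbps >= bandwidth_mbps
        and s.max_devices_per_km2 >= devices_per_km2
    ]

print(matching_slices(latency_ms=200, bandwidth_mbps=0.5, devices_per_km2=500_000))
```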

5G is designed to support up to 1 million devices per square kilometer and multi-gigabit throughput. This supports edge computing by enabling high device density, so more sensors can feed each edge node without connectivity bottlenecks.

Bandwidth-intensive edge applications like 4K video analytics and high-resolution imaging get the throughput they need. Coverage reaches edge deployments in challenging environments like industrial facilities and remote locations.

AI compute at the edge is powering the next generation of IoT

The convergence of Artificial Intelligence (AI) and edge computing is fundamentally changing what is possible in IoT. Previously, AI workloads, both model training and inference, ran on the massive computational resources of the cloud. Now, specialized hardware and optimized models allow high-speed AI inference (the process of using a trained model to make a prediction or decision) to run directly on edge devices or local gateways.

This shift to "AI at the Edge" is critical for modern IoT applications because it combines the real-time decision-making of edge computing with the predictive power of AI.

Real-time inference

AI models like computer vision, predictive maintenance, and natural language processing can make instantaneous decisions without the latency of a cloud round-trip. For example, a machine vision system can instantly detect a product defect on a manufacturing line, or a security camera can identify an unauthorized vehicle in milliseconds.

Data minimization

Instead of sending massive, raw data (like hours of video or millions of sensor readings) to the cloud for AI analysis, the edge device performs the analysis and only transmits the resulting insight, alert, or metadata. This drastically reduces the backhaul bandwidth requirements and lowers data transmission costs.
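
Here is a minimal sketch of this pattern: a local model runs over a window of frames, and only a compact summary is returned for transmission. The `detect` callable stands in for whatever on-device model is used; the fake detector, the 0.6 confidence cutoff, and the JSON summary format are all hypothetical.

```python
import json
from collections import Counter
from typing import Callable, Iterable, List, Tuple

Detection = Tuple[str, float]  # (label, confidence score) from the on-device model

def summarize_window(frames: Iterable[bytes],
                     detect: Callable[[bytes], List[Detection]],
                     min_score: float = 0.6) -> str:
    """Run a local model over a window of frames and return a compact JSON summary.

    Raw frames stay inside this function; only counts of confident detections leave it.
    """
    counts: Counter = Counter()
    n_frames = 0
    for frame in frames:
        n_frames += 1
        for label, score in detect(frame):
            if score >= min_score:
                counts[label] += 1
    return json.dumps({"frames": n_frames, "detections": dict(sorted(counts.items()))})

# Stand-in for a real on-device model (hypothetical): reports one confident defect per frame.
def fake_detector(frame: bytes) -> List[Detection]:
    return [("defect", 0.92), ("scratch", 0.31)]

frames = [bytes(1_000_000)] * 3  # three 1 MB placeholder "frames"
summary = summarize_window(frames, fake_detector)
print(summary, f"({len(summary)} bytes instead of {sum(len(f) for f in frames)})")
```

About 3 MB of input becomes a payload of a few dozen bytes, which is the kind of reduction that makes backhaul over cellular affordable.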

Operational autonomy

Edge AI enables devices to function intelligently even when completely disconnected from the network. This is crucial for remote or mission-critical applications like autonomous drilling equipment or deep-sea environmental monitoring.

Enhanced security

When sensitive AI analysis, such as facial recognition or proprietary process optimization, runs locally, raw private data never leaves the local network, which greatly improves data privacy and compliance.

The core principle is that the "brain" (the trained AI model) is delivered to the "eyes and ears" (the sensors and edge devices). The cloud remains essential for the initial, compute-intensive training of complex AI models, but the execution (inference) is pushed to the edge, where the decisions matter most.

Edge AI in action

Edge computing is changing how cities like Raleigh, NC, tackle traffic, and Raleigh launched a pilot program in January 2026 to prove it. Devices at intersections act as local brains: they analyze video feeds to count vehicles and pedestrians and adjust traffic signal timing on the spot. Because optimization happens immediately, there's less waiting. And instead of sending all that video to the cloud, the devices send only summary data, such as how many cars went by.
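
As a rough illustration of how local vehicle counts could feed signal timing, the sketch below reserves a minimum green for each approach and then splits the remaining green time in proportion to observed demand. This is a simplified, generic heuristic chosen for illustration, not Raleigh's algorithm; the cycle length, lost time, and minimum green are hypothetical values, and pedestrian phases are ignored.

```python
from typing import Dict

def split_green(counts: Dict[str, int], cycle_s: float = 90.0,
                lost_s: float = 10.0, min_green_s: float = 7.0) -> Dict[str, float]:
    """Share one signal cycle's green time among approaches in proportion to vehicle counts.

    Every approach first gets min_green_s; the remaining green time is split by demand.
    """
    usable = cycle_s - lost_s - min_green_s * len(counts)
    if usable < 0:
        raise ValueError("cycle too short for the requested minimum greens")
    total = sum(counts.values())
    greens = {}
    for approach, n in counts.items():
        share = n / total if total else 1 / len(counts)  # no traffic seen: split evenly
        greens[approach] = round(min_green_s + usable * share, 1)
    return greens

# Counts a local device might produce over the last cycle (hypothetical numbers).
print(split_green({"north_south": 30, "east_west": 10}))
```

Only the counts and the resulting timings need to leave the intersection, which matches the summary-only reporting described above.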

Raleigh sees this as a smart way to manage traffic and make streets safer without the headache of widening existing roads. Many of its main streets have enough capacity but are poorly served by outdated signal timing systems.

The city is also betting on this new AI-powered IoT to save a ton of money. For example, they've calculated that if they can improve the timing by one percent on a two-mile stretch of Capital Boulevard, a busy road with 60,000 drivers daily and six signals, they could save tens of thousands of dollars every day.

Plus, it's about improving the citizen experience. The city is confident that this AI will mean less gridlock, safer trips for everyone, and far less of that frustrating feeling we all get from sitting at a badly timed red light.

Building a successful edge computing strategy for IoT

Begin with a thorough use case assessment. Identify which IoT applications have the highest bandwidth costs, strictest latency requirements, or most critical reliability needs. Those are your best candidates for edge computing pilots.

Design your architecture for the edge-cloud continuum. Rather than thinking in terms of "edge versus cloud," map your data flows and processing requirements across device, edge, and cloud tiers based on latency, bandwidth, and computational requirements. This approach ensures each workload is handled in the most efficient location.

Choose connectivity that supports distributed architecture. Edge computing requires reliable connectivity to distributed nodes across diverse locations. Look for IoT connectivity providers that offer global coverage, multi-carrier redundancy, and flexible management tools. Hologram's platform provides high-speed, low-latency connections across 550+ carriers in 190+ countries, with robust API integrations for managing connectivity at scale.

Plan for scale from the start. Even if you're beginning with a pilot, design your edge architecture with growth in mind. Select edge hardware, orchestration platforms, and connectivity solutions that can scale horizontally as you add locations and devices. Prioritize security and manageability by implementing centralized management, monitoring, and security tools that give you visibility and control across all edge nodes.

Measure and optimize. Instrument your edge deployment to track key metrics like latency improvements, bandwidth savings, uptime gains, and cost reductions. Use this data to refine your edge/cloud processing split and justify expansion.
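
A pilot summary can start with a few numbers computed from logged data. The sketch below uses Python's `statistics.quantiles` for latency percentiles and compares raw versus transmitted data volume; the function name and inputs are hypothetical, and uptime and cost tracking are left out.

```python
import statistics
from typing import Dict, Sequence

def summarize_pilot(latencies_ms: Sequence[float], raw_gb: float, sent_gb: float) -> Dict[str, float]:
    """Latency percentiles and backhaul savings for an edge pilot.

    statistics.quantiles needs at least two latency samples on most Python versions.
    """
    cuts = statistics.quantiles(latencies_ms, n=100)  # 99 cut points: cuts[49] ~ p50, cuts[94] ~ p95
    return {
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        "backhaul_savings": 1 - sent_gb / raw_gb,
    }

# Hypothetical measurements from a pilot site.
print(summarize_pilot([4.1, 5.0, 5.2, 6.8, 7.5, 9.9, 12.3, 5.5, 4.8, 6.1], raw_gb=800, sent_gb=20))
```

Tracking tail latency (p95) alongside the median matters because real-time applications fail on their slowest responses, not their typical ones.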

Ready to optimize your IoT connectivity for edge computing? Get started with Hologram (https://hologram.io/contact-sales/) to discuss your specific requirements.

FAQs about edge computing in IoT

Can edge computing work with my existing cloud infrastructure?

Yes, edge computing complements rather than replaces cloud infrastructure by handling time-sensitive processing locally while still leveraging the cloud for long-term storage, complex analytics, and centralized management. Most organizations adopt a hybrid edge-cloud architecture that optimizes where each type of processing occurs.

What happens to my edge devices when internet connectivity fails?

Edge devices continue operating independently during connectivity outages, processing data and making decisions locally, then synchronizing with the cloud once connectivity is restored. This resilience is one of edge computing's key advantages over cloud-dependent architectures.

How much bandwidth can edge computing actually save for IoT deployments?

Bandwidth savings vary by application but typically range from 70-95% because edge systems process data locally and transmit only insights, alerts, and summaries rather than continuous raw data streams. Video analytics and high-frequency sensor applications see the most dramatic reductions.